
Drivers and Relocatable 6502 Code

Some quick housekeeping on my previous post, C64 OS Networking and Chess. I updated the post adding credit to Bo Zimmerman for the creation of the Zimodem firmware. And I had a chat with him about what I'd written. Turns out there are a couple of pieces of good news which I did not know.

The Zimodem firmware is entirely open source; in the update I linked to its GitHub repository. But what I learned is that there is nothing particular to the C64NET hardware that the Zimodem firmware requires. What this means is that if you purchased a WiFi modem other than the C64NET, and you want to have access to this more advanced feature set, you can likely update the firmware on your existing modem.

Any wifi modem that uses either the ESP8266 or ESP32 or NodeMCU is likely to take the Zimodem firmware just fine. In fact, I got a Strikelink modem at one point and immediately blasted over it with my own firmware. Bo Zimmerman — 2019

For anyone who might ask, "Well then what's the point in getting the C64NET hardware itself?" It has some advantages over the other hardware as well. Most other WiFi modems are powered directly off the C64's 5V lines. This can put stress on the power supply, as it wasn't designed to drive the extra load. C64NET gets its power from the user port's 9V lines, which it converts to 5V on the board. Additionally, the hardware handshaking lines, RTS and CTS, are wired up on C64NET and are not always on other WiFi modems.

One more piece of good news. I said I was hopeful that we'd be able to get Zimodem's feature set on a highspeed RS-232 modem, as that would be the ultimate combination. Well, there are two options for this. It is likely that you could update the firmware on, say, the WiModem232. Or even easier yet, electronicsisfun.com has also made the GuruModem.

An early design. It's available now, but may look slightly different.

The GuruModem is a highspeed modem that connects over standard RS-232, so it can be connected to a C64 via GLINK232 or Turbo232, and can transfer data at up to 230Kbps! It is also based upon the Zimodem firmware and supports all of its features and commands, plus has some additional features that are enabled in the firmware's codebase when it targets the GuruModem's slightly larger and more capable microcontroller. If you ask me, this is the gold standard for high speed networking on the C64. I am so excited to get it working and supported in C64 OS, and to see what our old brown friend can accomplish.

Now on to the show, a technique for making relocatable code on 6502.

Relocatable 6502 Code

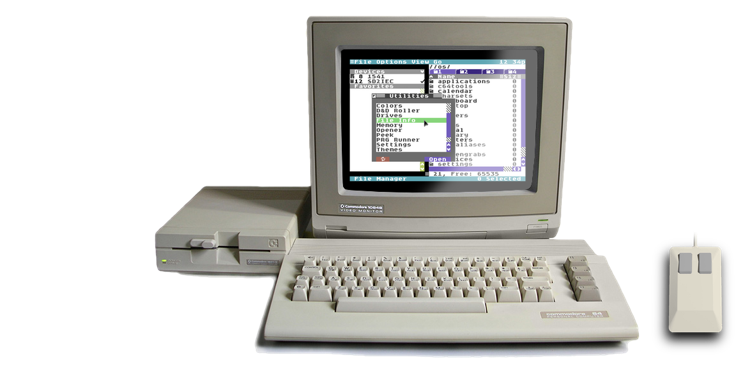

In February 2017 I wrote a short post called Memory Managed Loader. It's still worth a read, but I must warn you that at that early stage some of the things I said have not aged well. The memory map of C64 OS has changed a fair bit, the memory manager itself is quite a bit more sophisticated, featuring true malloc/free in addition to the page allocator, and C64 OS Utilities have since become a thing.

In the above linked post about loading, the focus was on how to coordinate an application's code with the memory page allocator. In C64 OS, only one application may be running at a time, in addition to one utility. The utility though runs from behind the KERNAL, which is space that isn't ever dynamically allocated because of its tricky use. And the application's code doesn't get relocated, it is always assembled to $0900.[1]

The only thing that could be relocated then was non-executable data. Examples might include: The menu system's structured data. User document data, such as a text file. Bitmap image data. Or, in a more specific application, like Chess, the data from a previously saved game, and so on. In each of these examples, the size of the data is computed and then a contiguous chunk of memory of that size or slightly bigger is requested from the memory manager. The pages allocated are marked as used and the address to the start of the block is returned. Then the data is simply loaded into that area. It isn't any more complicated than that, because the nature of this sort of data is such that it doesn't need to be anywhere specific to work.

This is not the case for 6502 executable code. You cannot simply allocate some space somewhere, load some code into that space and then start running it. Let's talk about why that is.

Absolute Addressing

In a nutshell, code can't just be loaded to anywhere because of absolute addressing. Some part of the code refers to some other memory location by using an absolute address. Let's say your code is assembled to $0801. But a few lines in there is a JSR to a routine that, when assembled, fell at $0850. The hard physical address, the bytes "50 08",[2] appears in the code following the JSR instruction. If we were to simply load the code starting at $0901 then the start of that subroutine would now be found at $0950, but the argument following the JSR is still $0850. When the JSR is executed, the CPU will jump to $0850, but who knows what it will find there, and a crash is virtually guaranteed.
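To make the mismatch concrete, here is a minimal Python sketch of the same example. It only models the three bytes of the JSR; the helper name `jsr_target` is made up for illustration.

```python
# A 3-byte JSR as assembled for a load address of $0801:
# opcode $20, then the target $0850 stored low byte first.
code = bytes([0x20, 0x50, 0x08])

def jsr_target(instruction: bytes) -> int:
    """Return the 16-bit address encoded after a JSR opcode ($20)."""
    assert instruction[0] == 0x20
    return instruction[1] | (instruction[2] << 8)

assembled_at = 0x0801
loaded_at = 0x0901
shift = loaded_at - assembled_at      # $0100, exactly one page

wanted = 0x0850 + shift               # where the subroutine lives after loading
actual = jsr_target(code)             # where the CPU will really jump

# prints: subroutine is at $0950, JSR still points to $0850
print(f"subroutine is at ${wanted:04X}, JSR still points to ${actual:04X}")
```

The bytes of the instruction are copied verbatim by the load, so nothing about the new location ever reaches the operand.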

It's easy to see how JMPs and JSRs are a problem, because they always take an absolute address, even an indirect JMP takes an absolute address for where to find the vector to jump through. If you then start to think on this problem a little bit you might recall that branches are relative, not absolute. And you might suppose that the solution then is to use relative branches instead of absolute JMP and JSR. Unfortunately, this is totally inadequate. The solution to making relocatable code cannot be, "Oh that's easy, just don't use two of the most important instructions in the entire set!"

The problem is much deeper than that. Many more instructions have an absolute mode and they are all important in the writing of a routine. We would be severely hampered if we tried to avoid them all. You wouldn't be writing 6502 anymore, but some tiny subset of 6502. For example, all the bitwise instructions, AND, ASL, EOR, LSR, ORA, ROL, and ROR have an absolute mode. All of the comparison instructions, CMP, CPY, CPX and BIT have an absolute mode. The math instructions ADC and SBC, and the memory instructions LDA, LDX, LDY, STA, STX, STY, INC and DEC all have absolute modes. And JSR and JMP. That's around 40% of the instruction set.

It's not just a matter of restraining yourself. For one example out of many, there is only one way to do a CMP, compare the accumulator with something, without the something requiring some absolute address. You can compare to an immediate value, aka a hardcoded static value. All other compares, including the ability to index, require an absolute address. Sometimes that address is in zero page, but it's still absolute in the nominal sense. It's a fixed address you choose at assemble time. The same problem exists with LDA, loading a value into the accumulator. There is just no way around it, you cannot avoid using absolute addresses.

Changing Absolute Addresses

What you want is, at load time, to change the code where absolute addresses are used so they point to the new address. In other words, if you assemble your program to $0801, and within that code there is an absolute reference to $0850, then when you relocate the program to $0901, the reference to $0850 should be changed to $0950. Now, if every reference like that could be found and updated, the program would run from the new location. So how hard can that be?

It's actually pretty tricky. There are a lot of little pitfalls you don't think about up front, that become apparent only after you get into the weeds.

The main problem is knowing what is an absolute address. I mean, changing an $08 to an $09 isn't just a straightforward search and replace, because any given $08 could also be the relative distance of a branch instruction, or it could be an instance of the PHP (PusH Processor status) instruction. It could be the low-byte of an absolute address. Or it could also be any other $08 that is being used as data in the program, such as a loop counter.

Somehow you need to be able to identify the instructions that are being used in an absolute mode in order to know that the following bytes are an address, because they're the argument of that instruction. To do this, let's only take LDA for example. LDA Absolute, LDA Absolute,X and LDA Absolute,Y have the opcodes $AD, $BD, $B9 respectively. Okay, so that means we need to find all the instances of $AD, $BD and $B9 then? But the same problem exists looking for these. How do you know that a given $AD isn't some address's low byte? Or a relative branch offset, or a byte of ASCII? You can't know that unless you follow the instructions of the program, knowing how many bytes long each instruction and its arguments are.

If we start at the top, we get the first byte and look it up in a list of opcodes. That table tells us how many bytes of arguments it takes, 0, 1 or 2. If it's 2 bytes long, it's a 16-bit address, so it's probably absolute. Otherwise, we could skip the arguments and find ourselves at the next opcode, and just carry on doing that until the end. Right? Wrong, totally wrong. Programmers can, and very often do, store variable data in between routines. It's common for a routine's variables to appear at the end of its code. That means, after any JMP, or any branch (because it could be, by the intention of the programmer, used to branch always), or any RTS, the following byte might not actually be code at all. It could be data.
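Here is a rough Python sketch of that naive top-to-bottom walk, to show how trailing data fools it. The length table covers only the four opcodes used in this made-up program, and the program itself is an assumption for illustration.

```python
# Instruction lengths for the few opcodes used in this example only.
LENGTHS = {
    0xA9: 2,  # LDA #immediate
    0xAD: 3,  # LDA absolute
    0x8D: 3,  # STA absolute
    0x60: 1,  # RTS
}

def linear_sweep(code: bytes, base: int):
    """Treat every byte as code; collect (address, operand) for 3-byte instructions."""
    found = []
    i = 0
    while i < len(code):
        length = LENGTHS.get(code[i])
        if length is None or i + length > len(code):
            break                      # unknown opcode or truncated instruction
        if length == 3:
            operand = code[i + 1] | (code[i + 2] << 8)
            found.append((base + i, operand))
        i += length
    return found

program = bytes([
    0xAD, 0x08, 0x08,   # $0801: LDA $0808  (reads the variable below)
    0x8D, 0x20, 0xD0,   # $0804: STA $D020
    0x60,               # $0807: RTS
    0xAD, 0x01, 0x02,   # $0808: three bytes of DATA, not code
])

found = linear_sweep(program, 0x0801)
for address, operand in found:
    print(f"${address:04X}: operand ${operand:04X}")
```

The third result, at $0808, is the data after the RTS being misread as `LDA $0201`. A relocator that trusted this walk would patch a byte that was never an address.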

One way around that would be to trace the program. Okay, so how do we follow the trace of a program? Well, we could assume that the first byte is executable code and not data, and then we could literally step through the code, recursively following its subroutines and all of its possible branches. This would eventually encounter all the areas of the code, and skip the areas of data, probably, though there might be other pitfalls if we got into the weeds on that. But to do this, if it isn't obvious, would require a huge amount of time and code to accomplish. A PC analyzing some 6502 code could do it, but we won't be able to do this at runtime, on the C64 itself.

But even if we could—let's just imagine we can—not every absolute address needs to be changed. Some absolute addresses refer to legitimately absolutely stored data. Such as when a routine loads a variable from the operating system's workspace memory. And sometimes a JSR to an absolute address will actually be to a routine provided by the OS. Those addresses are going to stay the same no matter where you relocate your own routines. Maybe there is a way around this. Such as, addresses below or above the range that your relocatable code was originally supposed to fall into ($0801 to say $0AFF), anything below $0801 is probably a workspace memory address, and any address above $0AFF is probably a system routine.

If it were even possible to recursively trace the whole program at runtime, there are still an awful lot of assumptions and guesswork. And as Groepaz once pointed out to me on IRC, you can throw all of this to the wind if the programmer is using certain self-modifying code techniques. Because with self modification, data that the tracer would skip can become absolute addresses. In short, it's a total clusterf__k.

I had an idea

I had an idea. I don't need to relocate some demo coder's insane-genius, circuitous, self-modifying routine. What I need is the ability to relocate chunks of code that have a limited scope. Let me give you the real world example I was wrestling with: Screen capture.

I wanted C64 OS to have a screen grab feature, triggerable with a global keyboard shortcut. But this code is really superfluous. I can't sacrifice the 100 to 150 bytes or so for some feature that some people will never use. Yeah, yeah, it's only 150 bytes. But it's the principle of the thing. What about the next 10 superfluous features that are 200 bytes each. We can't permanently flush 2K down the superfluous-feature-toilet. This is the perfect candidate for relocation. A short routine, that could be loaded on command, relocated to any available place, (and what is free will depend on what's going on at runtime,) then execute, do its job, write the contents of the screen to a file, and then get the hell out of there. It's perfect.

The idea started simple. If the relocatable code can fit in just one page of memory, 256 bytes, then it could fit within any page that the memory page allocator can return. But better yet, all of the absolute addresses that need to be updated will be pointed to within that page. Which means the value to overwrite them with is just the page number of the freshly allocated page. How many of these absolute references could there be? 5, 10, 20 might be the upper limit. All we have to do is know where those 10 or so bytes are. But, we also know that they fall within that page, so they can all be specified with just a single byte offset each.

The idea then was for a relocatable 1-page binary to start with a table of offsets. Each byte in the table is the offset within that page of where an absolute address's high byte is found. The table ends with a 0 byte, and the code begins immediately after the table. The loader allocates a page, reads the binary into that page. Then loops over the table and writes the allocated page number into that very page, indexed with the bytes from the table. This is easy to do because you can loop over the table with one index register, and you can load the table value into the other index register, to do the actual overwrite with an indexed STA. After hitting the end of the table, the loader could just immediately jump to the first byte following the table to run the relocated program.
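The table-driven loader described above can be modelled in a few lines of Python. This is a sketch of the idea, not the actual C64 OS routine: the function name, the dummy assemble-to page of $10 and the tiny example binary are all assumptions.

```python
def relocate_with_table(binary: bytes, page: int):
    """Model of the offset-table loader. Returns (page contents, entry address)."""
    assert len(binary) <= 256, "must fit in one page"
    mem = bytearray(binary.ljust(256, b"\x00"))
    i = 0
    while mem[i] != 0:            # the table is terminated by a 0 byte
        mem[mem[i]] = page        # overwrite the high byte found at that offset
        i += 1
    entry = (page << 8) | (i + 1) # code begins right after the terminator
    return mem, entry

# Hypothetical binary assembled to page $10:
binary = bytes([
    0x04, 0x00,          # table: one high byte at offset 4, then terminator
    0x20, 0x06, 0x10,    # offset 2: JSR $1006 (high byte sits at offset 4)
    0x60,                # offset 5: RTS
    0x60,                # offset 6: the subroutine, just an RTS
])

mem, entry = relocate_with_table(binary, 0x92)
print(f"entry ${entry:04X}, JSR operand now ${mem[3] | (mem[4] << 8):04X}")
```

On the 6502 the outer loop index and the table value would live in X and Y, which is why the post notes that the patch is a single indexed STA.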

I implemented this, and to my pleasure and elation, it worked! It really does work. God I love it when things work. It feels so great to have an idea, try it out and see it working.

The idea hits a wall

First I did the screen grab program. And that worked. Later I worked on the NESTester application which is not only handy for testing the modified NES controllers I make, but also it's a handy testing ground for game controller drivers in C64 OS. I want C64 OS to offer standardized controller drivers, so that games written for it will not poll the hardware directly. But instead read the byte flags set by the driver. This will allow for a driver to properly poll a NES controller's 8-bit shift register, and the game's code will be none the wiser. Okay, so I wrote two relocatable NES controller drivers. One for 2 player, one for 4 player. And that worked too!

So now I had the idea that these relocatable 1-page units of code can work as drivers. An obvious move is to pull the 1351 mouse driver code out of the KERNAL, thus making the KERNAL smaller, and put it into a loadable and unloadable, relocatable driver. But this is where things got tricky.

The first problem is that I blew the 256-byte limit. Landing somewhere closer to 330 bytes. Somehow I had to shave 70 to 80 bytes from the code. But this problem arose because unlike the previous relocatable routines, the mouse driver actually needs two separate routines to be called at different times. The only sensible way to do this is to have a jump table at a predictable place. Normally "a predictable place" means at the start of the code. So that the PAGE+0 is the first routine, PAGE+3 is the second routine, PAGE+6 the third and so on. But this interfered with the relocation table. Nor can the jump table follow the relocation table, because it will vary in length for each driver, and the whole point of a driver is that different variations can be substituted and the calls and the results are the same.

I came up with a solution, but it was so diabolical even I got a sour taste in my mouth implementing it. I had the init routine, the code that gets called once immediately following the relocation table, rewrite the relocation table as a jump table. But the init routine itself has references back to the places in the relocation table, which themselves need to be relocated. It's so loopy, I could barely keep straight how it would work. It turns out, in my tests, I actually could make it work. But it increased the length of the relocation table, plus it added a bunch of code to the init routine to generate the jump table, plus it adds a lot of confusing and difficult to write overhead to the whole affair. It's not exactly what you want to foist on prospective developers.

And in the end, it wouldn't work anyway, because the added overhead pushed past the limit afforded by one page of memory, a mere 256 bytes.

The idea refined

Whenever I need a solution to something tricky, I like to go on long walks to clear my head. On one such walk this idea came to me.

Within a 256-byte page there can only be 256 possible different bytes. And that would only be possible if every byte in the page were unique. In practice this would never happen. If there were even one byte value that you used twice, that guarantees that some other byte value never appears anywhere within the page. And again in practice there would be many unused byte values. Every time you use another LDA, that's one more byte value unused in the page.

All we have to do is assemble the code to a page, whose byte value is otherwise unused. This has the result of guaranteeing that every instance of that byte is in fact the high byte of an absolute address that needs to be relocated.

Somehow, when we load in this chunk of code, we just need to know what that byte is so we can search and replace it. It occurred to me that the byte will be in the load address of the file, but it's kind of a pain to get at that byte. When you use the KERNAL to load the binary to the address of your preference, it overwrites the original load address before you ever have a chance to fetch it. And it takes a fair bit of code to open the file and prefetch the byte, only to close the file and then load it to your page of preference.

Instead, I've made the first byte of the relocatable format the byte that needs to be replaced. This way, the loader loads the code into the allocated page. Then it reads the first byte, and replaces that first byte with the opcode for an absolute JMP ($4C). Then loops over the whole page looking for instances of the relocate byte and replacing them with the allocated page number. And that's it!
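As a sanity check of the search-and-replace step, here is a small Python model. It is a sketch under assumptions: the name `load_reloc` and the 12-byte example binary (assembled to a hypothetical $FC00) are invented for illustration, and the real loader is 6502 code working on an allocated page.

```python
JMP_ABS = 0x4C  # opcode for an absolute JMP

def load_reloc(binary: bytes, page: int) -> bytearray:
    """Model of the loader: first byte is the relocate byte, then patch the page."""
    assert 0 < len(binary) <= 256, "must fit in one page"
    mem = bytearray(binary)
    reloc = mem[0]                 # the byte value that marks a high byte
    mem[0] = JMP_ABS               # first byte becomes the JMP of entry one
    for i in range(1, len(mem)):
        if mem[i] == reloc:
            mem[i] = page          # point the address into the new page
    return mem

binary = bytes([
    0xFC, 0x06, 0xFC,   # offset 0: relocate byte, then target $FC06
    0x4C, 0x0A, 0xFC,   # offset 3: JMP $FC0A (second jump table entry)
    0xAD, 0x0B, 0xFC,   # offset 6: LDA $FC0B
    0x60,               # offset 9: RTS
    0x60,               # offset 10: RTS
    0x00,               # offset 11: a variable
])

mem = load_reloc(binary, 0x92)
print(mem.hex(" "))
```

Every $FC has become $92 and the first byte is now a JMP, so the page starts with a working two-entry jump table at $9200 and $9203.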

The relocation table is reduced to just one byte, and even that isn't wasted as it gets converted into the JMP of the first jump table entry. Additionally, to populate the old relocation table I had to litter the code with labels to bytes needing relocation. Those labels are no longer necessary. It's now very straightforward. You simply write the code just as you would. The start of the code eventually ends up looking something like this:

UPDATE: October 1, 2019

Tweeted out that I'd published this post, and in less than a day I've already gotten an excellent suggestion. Kroc Camen pointed out what I should have noticed. As long as you stipulate that these relocatable code blocks have to start with a jump table, and this was stipulated by the way, because the loadreloc routine replaces the first byte with a JMP instruction, then the relocation byte is already there at byte 2. The relocation byte is just the high byte of the first entry in the jump table.

This means, actually, there is nothing special at all about how you write the code. Just write the code as you would. Start with a jump table, making sure the first jump table entry is to something within the page, of course. And the loadreloc routine can be made a little shorter. Thanks for the feedback!

It's very easy to write the relocatable code. You just write the code as you normally would. After that, you have to figure out what byte isn't used by your code. To do this, I wrote a small BASIC program to do a little static analysis on an assembled version of the code. First, I assemble the code to $1000, then run the BASIC analyzer on it to find a vacant byte, then replace the "$10" of $1000 with an unused byte and reassemble to make the final relocatable.

Let's quickly step through this BASIC program to see what it does. It asks for a filename and saves that as F$. Line 10 then clears out a page in high memory, $C000 to $C0FF, filling it with zeros. Zero indicates the byte is not used.

Line 15 opens the file and reads three bytes, L$, H$ and R$. L and H are the low and high bytes of the load address. And R$ is the first byte of data, that's the relocatable byte we set in the code. Line 18 confirms that the high load byte and the relocation byte are the same. These have to be the same or it's a bad relocatable file. It's the high byte that determines what all the absolute addresses within this file will have as a high byte. And the relocate byte needs to match this.

Lines 20 to 40 fetch a byte from the file and convert it to a zero if it's a blank string (because of a peculiarity in BASIC's GET#). Then the value of this byte is used as an offset into $C000 whither a 1 is written. The one indicating that at least one byte of this value is used in the code. If we haven't reached the end of the file, loop back and keep getting more bytes.

Close the file, and print out that scanning is complete. The last step is to loop over all the bytes of $C000 to $C0FF comparing to 0. If it's zero, that byte isn't in use, and gets printed out. Running this BASIC program usually reveals lots and lots of bytes that aren't in use. Any of these can be chosen as the relocation byte. My personal preference is to use a relatively high byte, that's not $FF. The idea is to pick a byte that is unlikely to cause a conflict if you decided to iterate on the code and reassemble it a bunch of times in a row. For the eventual final build, you really should use the BASIC script to analyze it properly again.
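For readers following along on a PC, the same analysis is easy to approximate in Python. This is not the BASIC program from the post, only a sketch of the steps it describes; the function name and the sample bytes are assumptions.

```python
def unused_bytes(prg: bytes) -> list:
    """Return every byte value that never appears in the code after the first byte.

    prg is the whole file: load address low, load address high, then the data,
    whose first byte is the relocate byte.
    """
    lo, hi, reloc = prg[0], prg[1], prg[2]
    if hi != reloc:
        raise ValueError("bad relocatable file: relocate byte != load address high byte")
    used = [False] * 256           # plays the role of the page at $C000
    for value in prg[3:]:
        used[value] = True
    return [value for value in range(256) if not used[value]]

# Hypothetical file assembled to $1000 with a relocate byte of $10.
sample = bytes([
    0x00, 0x10,         # load address $1000
    0x10, 0x06, 0x10,   # relocate byte, then target $1006
    0x4C, 0x0A, 0x10,   # JMP $100A
    0xAD, 0x0B, 0x10,   # LDA $100B
    0x60, 0x60, 0x00,
])

free = unused_bytes(sample)
print(f"{len(free)} unused values, $FC free: {0xFC in free}")
```

To run it on a real file, something like `unused_bytes(open(path, "rb").read())` would do, where `path` is whatever your assembler wrote out.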

Usually a byte like $FC is available. There are several reasons. $FC doesn't correspond with any legal 6502 opcode. It's too big to likely be a branch distance. It doesn't have any corresponding PETSCII value. And it's very near the end of the page, so, it's unlikely to be the low byte of any address either, unless your code goes right to the end. You have to use the BASIC program to check, but in my experience, $FC is usually available.

So to recap, first assemble to $1000 with a relocate byte of $10. Then run the BASIC analyzer. Then, assuming $FC is found to be available, you go back into your source code, change the assemble-to address to $FC00 and pop $FC into the first byte of the code and reassemble.

Limitations

The new refinement on the idea is so much better than the relocation table. There are limitations though, on what can be relocated and what relocated things can work together.

The most obvious limitation is that this relocation scheme is limited to just 1 page of memory. How much can you get done in only 256 bytes? You can get a surprising amount done in such little space. The 1351 mouse driver was 70 to 80 bytes over the limit. I got it down to within the size limit. It now assembles down to $F6, or 246 bytes. I've got only 9 bytes to spare, but it all fits.

Besides space, there is the limitation of shared zero page and shared workspace memory. The 6502 was really not designed for multitasking. In order to do any indirect indexing at all, the vector has to be in zero page. You cannot therefore simply relocate 20 different small one-page programs and let them run wild at the same time.

My solution to this is that despite the fact that you can load and unload the programmatic implementation of the drivers, their ability to plug into the system is there all the time. Let me give you an example. There are 4 bytes in workspace memory that are designated as the 4 game controller status bytes.

| Port | Address | Hardware |
|---|---|---|
| Control Port 1 | $0304 | Built in |
| Control Port 2 | $0305 | Built in |
| Control Port 3 | $0306 | Optional |
| Control Port 4 | $0307 | Optional |

Those four addresses are designated as the 4 controller ports, all the time, even if there is no game controller driver loaded in. If you're writing a game, you can poll those 4 bytes. Each byte has 8 bits used to represent the 8 buttons listed below. If you are a game that supports 4 players, you don't worry about how these 4 controller status bytes get populated. You just poll the 4 bytes. At a different level the user can choose which four player driver to install. But all four-player drivers, no matter what hardware they support and how they acquire their data, populate these four status bytes.

Besides the standard four player user port adapter, a 4-player driver could be written that allows the two additional players by using keys on the keyboard. W,A,S,D plus C and V for player 3, and I,J,K,L plus two other keys for fire buttons for player 4. The point is that the game shouldn't care what is populating those controller status bytes. But the second point is that the system itself has those 4 status bytes set aside. If a four player game is being played while a two player driver is installed, then the game doesn't know that either, and merely sees that none of the buttons on Controller 3 or 4 are being pushed.

| Bit | Number | Button |
|---|---|---|
| %00000001 | 1 | Up |
| %00000010 | 2 | Down |
| %00000100 | 4 | Left |
| %00001000 | 8 | Right |
| %00010000 | 16 | Fire 1 |
| %00100000 | 32 | Fire 2 |
| %01000000 | 64 | Select |
| %10000000 | 128 | Start |
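From the game's side, reading a controller comes down to masking one status byte. The Python sketch below illustrates that with the two tables above. It assumes a set bit means the button is held, which the tables imply but do not state, and the `pressed` helper is invented for illustration.

```python
# Status byte addresses and bit masks, copied from the tables above.
PORT_ADDRESS = {1: 0x0304, 2: 0x0305, 3: 0x0306, 4: 0x0307}
BUTTONS = {
    0x01: "Up", 0x02: "Down", 0x04: "Left", 0x08: "Right",
    0x10: "Fire 1", 0x20: "Fire 2", 0x40: "Select", 0x80: "Start",
}

def pressed(status: int) -> list:
    """Names of the buttons whose bits are set (assumption: 1 = pressed)."""
    return [name for mask, name in BUTTONS.items() if status & mask]

mem = bytearray(65536)               # stand-in for C64 memory
mem[PORT_ADDRESS[1]] = 0b00010001    # pretend a driver reported Up + Fire 1

for port, address in PORT_ADDRESS.items():
    print(port, pressed(mem[address]))
```

Ports 3 and 4 simply read as empty lists here, which is what a four player game would see with only a two player driver installed.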

Or take the 1351 mouse driver, for example. It reads its acceleration value from a byte in workspace memory, $0290, and its double click delay value from another byte, $0288. And when it writes its mouse movements, it updates the cursor position at $41/$42 for the X axis and at $43/$44 for the Y axis. It writes its button status to $F2.

It doesn't matter what other input driver there is, they will all write the changes of mouse position to $41/$42,$43/$44, and the status of their buttons to $F2. After extracting the 1351 mouse driver code out of the KERNAL and putting it into a relocatable driver, I wrote a joystick driver. Assembled twice, to two different drivers, one for port 1 and another for port 2. It writes the mouse position changes and button status to the same places. And it reads the acceleration and the double click delay from the same addresses as the mouse.

The C64 OS Mouse utility can be used to tweak the variables to set double click delay and acceleration, along with the mouse pointer sprite and colors, and even the driver to use.

Installing a driver

Just as the drivers get their configuration variables from known and dedicated workspace memory addresses, somehow the system needs to know whether a given driver is installed, where it is installed, and when to call it. For this, I'm dedicating a block of about 8 bytes (tentatively $0801 to $0809) as page indicators for up to 8 drivers. Four of those are already assigned, leaving 4 more for future expansion. Input driver, game controller driver, network physical layer driver, network command layer driver. After that, maybe a printer driver. I'm not sure what else there is. Maybe a digital audio driver. Maybe a keyboard driver.

When a driver is loaded, the relocatable loader returns the page number it was installed to. This page number is then written into the corresponding address for that driver type. For example, the booter reads the system config files, pulls out the name of the input driver to install at boot up. It calls the relocatable loader, and gets back the page where it was installed. Then writes this byte to the constant IDRVPG (input driver page), defined as $0801. The booter also initializes JDRVPG (joystick driver page), defined as $0802, to 0.

The KERNAL's input module has a routine called polldevices. The main interrupt service routine calls polldevices as one of its standard steps. Polldevices has support for all three types of input, input driver (aka mouse), controller driver (aka 2 or 4 joysticks), and keyboard, which is currently hardcoded into the input module. Polldevices then checks if there is an input driver by loading IDRVPG. If it's zero, it moves on. If it's set, it calls into it. Then it does the same for the controllers.

Uninstalling the mouse driver, then, is as easy as:
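In terms of a small Python model (a sketch only: `call_driver` is a hypothetical stand-in for the JSR into the driver's page, and whether the real code also frees the page is not covered here), clearing the driver page byte is enough for polldevices to skip the driver:

```python
IDRVPG = 0x0801   # input driver page, as defined in the post
JDRVPG = 0x0802   # joystick driver page, as defined in the post

mem = bytearray(65536)   # stand-in for C64 memory
calls = []               # records which driver pages were called

def call_driver(page: int) -> None:
    """Hypothetical stand-in for a JSR to the jump table at page * 256."""
    calls.append(page)

def polldevices() -> None:
    """Call each installed driver; a page byte of zero means none installed."""
    for slot in (IDRVPG, JDRVPG):
        page = mem[slot]
        if page != 0:
            call_driver(page)

mem[IDRVPG] = 0x92       # a mouse driver was installed to page $92
polldevices()            # the driver gets called once

mem[IDRVPG] = 0          # uninstall: clear the page byte
polldevices()            # nothing is called this time

print([f"${page:02X}" for page in calls])
```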

How about installing a driver at runtime, rather than boot time? This is actually similarly easy. The relocatable loader takes a RegPtr (X/Y Lo/Hi pointer) to a C64 OS file reference. A file reference is exactly one page of page aligned memory. The file reference must actually refer to a relocatable binary. This part is kinda cool, the relocatable loader loads the binary into memory, overtop of the file reference being used to load it. And it returns the page number for convenience. You then write that page number into the corresponding driver page byte. Like this:
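Modelled in Python, the sequence looks roughly as follows. Everything here is an assumption made for illustration: the `loadreloc` signature, passing the binary in directly instead of reading it from disk, and the four-byte dummy driver.

```python
IDRVPG = 0x0801           # input driver page, as defined in the post
mem = bytearray(65536)    # stand-in for C64 memory

def loadreloc(x: int, y: int, binary: bytes) -> int:
    """Hypothetical model of the relocatable loader.

    X/Y form a lo/hi pointer to a page-aligned file reference. `binary`
    stands in for the file that the reference points to on disk.
    """
    assert x == 0, "a file reference is page aligned"
    assert 0 < len(binary) <= 256
    base = y << 8
    mem[base:base + len(binary)] = binary    # load over top of the file reference
    reloc = mem[base]
    mem[base] = 0x4C                         # first byte becomes a JMP
    for i in range(1, len(binary)):
        if mem[base + i] == reloc:
            mem[base + i] = y                # patch high bytes to the new page
    return y                                 # page number, for convenience

driver = bytes([0xFC, 0x03, 0xFC, 0x60])     # becomes JMP $xx03, then RTS

page = loadreloc(0x00, 0x92, driver)         # file reference lives at $9200
mem[IDRVPG] = page                           # plug the driver into the system

print(f"driver at ${page:02X}00, IDRVPG = ${mem[IDRVPG]:02X}")
```

The file reference is consumed by the load, which is why the page number comes back as a return value.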

I'm not showing here how the file reference gets assembled. However, there is a good chance that the file reference will actually be assembled for you by the C64 OS File utility that is used to pick and open the driver. Or, alternatively, it could be opened and deserialized from a file that previously saved a serialized version of the file reference.

Options for the future

At the moment I'm only supporting 1-page relocatable binaries. But, it would be possible to use the same technique to expand this to more than 1 page.

The process would be exactly the same, after writing your code and assembling it to the dummy location of, say, $1000, you'd run the BASIC analyzer. But this time, instead of looking for any single unused byte, you'd look for any two unused consecutive bytes. Then assemble the program to the first of those two. All instances of both of those bytes are then to be substituted with the new page location and the new page location plus one.

For instance, you write your code, and it ends up being 435 bytes long. More than one page, but not more than two. Assemble to $1000, analyze, and discover that bytes $FC and $FD are both available. Reassemble to $FC00, with $FC as the relocation byte. The loader would have to be updated to allow taking an optional number of consecutive pages. Let's say it gets loaded from $9200 to $93FF. $92 is returned. Then it replaces all instances of $FC with $92, and all instances of $FD with $93. And, everything should work.
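Since this extension is only proposed, the following Python is purely a sketch of how it might go. Both helpers are invented names, and the example assumes the code already begins with a real JMP, as in the update above.

```python
def find_run(used: set, count: int):
    """First byte value v where v .. v+count-1 are all unused, else None."""
    for v in range(256 - count + 1):
        if all((v + k) not in used for k in range(count)):
            return v
    return None

def relocate_pages(code: bytes, old_first: int, new_first: int, pages: int) -> bytes:
    """Swap each assembled-to page byte for the matching allocated page byte."""
    mapping = {old_first + k: new_first + k for k in range(pages)}
    return bytes(mapping.get(value, value) for value in code)

# Analysis step: pretend every value except $FC and $FD occurs in the code.
used = set(range(256)) - {0xFC, 0xFD}
first = find_run(used, 2)

# Load step: a fragment assembled to $FC00-$FDFF, loaded to $9200-$93FF.
fragment = bytes([
    0x4C, 0x06, 0xFC,   # JMP $FC06 (first page)
    0x20, 0x10, 0xFD,   # JSR $FD10 (second page)
    0x60,               # RTS
])
moved = relocate_pages(fragment, first, 0x92, 2)
print(f"first free pair at ${first:02X}:", moved.hex(" "))
```

Longer runs get rarer as the code grows, which `find_run` reports by returning `None`.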

This general technique should work up to any number of pages, except, obviously, the more pages get added and the more code written, the lower the probability that there will be that number of consecutive unused bytes. But, it would probably work for 2, 3, or 4 pages. You can pack a lot into 256 bytes. And you can pack more than you'd think into a few consecutive pages.

That's all for now. Enjoy your C64!

[1] Note that in the earlier post the default loading address was $0801. It's now $0900 and is likely to stay there.

[2] The 6502 is little endian, recall, so the two bytes come in the order least significant byte first.


Greg Naçu — C64OS.com