Base Conversion in 6502 (1/2)

I'm working my way through figuring out how to build a widget toolkit for a text-based UI that borrows a lot from the way hierarchical widget toolkits work in graphical user interfaces. But, it's a huge mountain to climb. And I don't want to let the blog go without any development love for too long.

In my post about implementing factorial, from absolute scratch, in 6502, an issue came up at the end: converting the binary result of the math into something human readable. There I included a very simple routine that converts the number into hexadecimal. I chose hex because it is so simple. You just split the byte into two values, each with a range from 0 to 15. To grab the most significant 4 bits you shift the byte right 4 times. And to isolate the least significant 4 bits you can just mask out the upper 4 bits. Then each of those two resulting values can be used as an index into a small lookup table of, say, PETSCII character values.
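The shift, mask and lookup steps are easy to model outside of assembly. What follows is only a Python sketch of the idea, not the routine from the factorial post. The names `HEX_DIGITS` and `byte_to_hex` are made up for illustration, and an ordinary string stands in for the PETSCII lookup table, which assumes the character codes for these digits and letters line up with ASCII.

```python
# Stand-in for the lookup table: one character per value 0..15.
# Assumption: a plain string is close enough to a PETSCII table for this demo.
HEX_DIGITS = "0123456789ABCDEF"

def byte_to_hex(value):
    """Return the two hex characters for a single byte (0-255)."""
    high = (value >> 4) & 0x0F   # shift right 4 times: the upper 4 bits
    low = value & 0x0F           # mask out the upper 4 bits: the lower 4 bits
    return HEX_DIGITS[high] + HEX_DIGITS[low]

print(byte_to_hex(0x94))  # 94
print(byte_to_hex(0x0A))  # 0A
```

On the 6502 the same thing would be done with four `LSR` instructions for the high half and an `AND #$0F` for the low half, with each result used as an index into the table.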

But how do you convert into something other than hexadecimal? What if you want to convert a binary integer into decimal? Or vice versa? That's quite a different story.

Fortunately we have Garth Wilson of Wilson Mines Co. and his wonderful website about 6502 programming, http://wilsonminesco.com, to rely on. I found the following descriptions on his page about Large Look-up Tables for Hyperfast, Accurate, 16-Bit Scaled-Integer Math.

The whole article is interesting, although it's quite a bit beyond my level of experience. There is however a particularly useful section listed in the table of contents as, Easy conversion to and from any base. His description is written out in prose, but in my experimenting with the idea it seems to work well. So I thought I'd expound on it with a bit more practical detail here.

Convert a string number to HEX

What he refers to as hex is, in my reckoning, really just binary, but we get to type those binary numbers in hexadecimal notation in our assembler. Here is the difference between a character and its numeric value, if you have a single byte:

    "1" == 0011 0001, or 0x31 (Petscii "1")
     1  == 0000 0001, or 0x01
    "a" == 0100 0001, or 0x41 (Petscii "a")
    $a  == 0000 1010, or 0x0a

The question is, how do you convert a string of PETSCII digits into the binary number it represents? Here's what Garth Wilson says about it:

For inputting numbers from a text string (e.g. typed in from a keyboard), initialize a number as 0 to build on, then take the first digit in the string and add its value to the hex number you're building. Continue until you're out of digits, each time multiplying the build by the number in variable BASE and then adding the new digit's value to the build.

Let me convert that into pseudo code for you.

    Define BASE.
    Initialize number to 0.
    loop:
        Multiply number by BASE.
        Read first digit from string.
        Add its value to number.
        If string has more digits, go to loop.
    End.
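For anyone who would rather poke at this on a modern machine first, here is the same pseudo code as a small Python sketch. The function name `parse_number` is my own invention for illustration. It assumes the string contains only the characters "0" to "9", so it only makes sense for bases up to 10.

```python
def parse_number(text, base=10):
    """Build an integer from a string of digits, most significant digit first."""
    number = 0                        # initialize number to 0
    for ch in text:                   # take the digits from left to right
        number = number * base        # multiply number by BASE
        number += ord(ch) - 0x30      # add the digit's value ("0" is $30)
    return number

print(hex(parse_number("43156")))     # 0xa894
```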

Next let's step through the pseudo code with an example number, seeing how it converts one step at a time.

    BASE = $A (10 in decimal)
    "43156"
    --------------------
    loop 0:  $0 * BASE == $0
             "4" << "3156"
             $0 + $4 == $4
    loop 1:  $4 * BASE == $28
             "3" << "156"
             $28 + $3 == $2B
    loop 2:  $2B * BASE == $1AE
             "1" << "56"
             $1AE + $1 == $1AF
    loop 3:  $1AF * BASE == $10D6
             "5" << "6"
             $10D6 + $5 == $10DB
    loop 4:  $10DB * BASE == $A88E
             "6" << ""
             $A88E + $6 == $A894
    --------------------
    $A894

Before describing what I did above, let's just use our calculator to confirm that $A894 is equal to 43156. And, it is.

So, we begin by defining BASE as $A. This is base 10, because that's the base that is used in the string input. If the string input were actually in octal, well then BASE should be defined as $8 and all the intermediate results would change.
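To see how the intermediate results change with BASE, here is a rough Python sketch that records the running total after each digit. The helper name `running_totals` is hypothetical, and it assumes every digit in the string is valid for the chosen base.

```python
def running_totals(text, base):
    """Return the running total after each digit has been folded in."""
    total = 0
    totals = []
    for ch in text:
        total = total * base + (ord(ch) - 0x30)  # multiply by BASE, add the digit
        totals.append(total)
    return totals

# The same digit string, read first as decimal and then as octal.
for base in (10, 8):
    steps = running_totals("43156", base)
    print("BASE", base, [hex(t) for t in steps])
```

With BASE set to 10 the totals end at $A894, as in the hand-worked trace. With BASE set to 8 the same digits end at $466E, which is 18030 in decimal.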

Between the two horizontal rules, I've labelled 5 loop iterations (0 through 4). That's because the original input string contained 5 digits. Each loop iteration starts by multiplying our running total by BASE. In the zeroth iteration, because the running total started as 0, multiplying it by BASE doesn't change its value.

In each loop iteration we pull one digit off the left hand side of the string. That is, you are processing the most significant digit first. That "W" << "XYZ" is just to show that "W" has been pulled off the left side of the string, leaving behind only "XYZ" in the string, to be dealt with next iteration.

The single digit that is pulled off the string has to be converted to an integer. This isn't shown here, but it's super easy. If the input string is a decimal value in petscii, then using a PETSCII table we can see that "0" is $30, "1" is $31, "2" is $32, etc. So we just have to subtract $30 from the single character to get its integer value. That integer value is added to the running total. If the remaining string still has any digits left over, then we do another loop iteration.
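The character-to-integer step might look like this in Python. It is just a sketch: the name `digit_value` is made up, and the range check is my own addition that the prose above doesn't call for. The input is the raw character code, the way the 6502 would see it in the accumulator.

```python
PETOFFSET = 0x30  # character code of "0"

def digit_value(code):
    """Convert the character code of a decimal digit to its integer value."""
    if not 0x30 <= code <= 0x39:          # extra safety check, an assumption
        raise ValueError(f"not a decimal digit: ${code:02X}")
    return code - PETOFFSET               # "0" -> 0, "1" -> 1, ... "9" -> 9

# Iterating over a bytes object yields the character codes as integers.
print([digit_value(c) for c in b"43156"])  # [4, 3, 1, 5, 6]
```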

In a real implementation we'll need to use a multiplication routine too, since the 6502 doesn't have instructions for multiplication. But we had a good 16-bit multiplication routine in the post about implementing factorial.

We'll also have to worry about precision here. If we use 16-bit multiplication and 16-bit addition inside the loop, it will limit the maximum size of integer that can be parsed out of a string representation of a number, to 65535.
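Here is a quick way to see that limit in action. This Python sketch forces every multiply and add to stay within 16 bits by masking with $FFFF. That assumes the 6502 routine simply keeps the low 16 bits and ignores any carry out of the high byte. The name `parse16` is hypothetical.

```python
MASK16 = 0xFFFF  # keep only the low 16 bits, like a two-byte result

def parse16(text, base=10):
    """Parse a digit string using arithmetic that wraps around at 16 bits."""
    total = 0
    for ch in text:
        total = (total * base) & MASK16            # 16-bit multiply
        total = (total + ord(ch) - 0x30) & MASK16  # 16-bit add
    return total

for s in ("65535", "65536", "70000"):
    print(s, "->", parse16(s))
```

"65535" comes through intact. "65536" wraps around to 0 and "70000" comes out as 4464, so anything above 65535 silently gives a wrong answer unless the code checks for overflow.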

String number to HEX Implementation

Here's a quick little implementation of the above. Works exactly as expected.

So, how does this work? Let's walk through it a line at a time. I'm using a very similar 16-bit multiplication routine to the one used in Implementing Factorial, with a small change. I removed the code that preserves multiplier, because I'm going to be clobbering multiplier with product immediately after the multiplication. However, for more info on this routine and where I got it you can read about it in the aforementioned post.

The initial constants are workspace variables for the multiplication routine. Then we declare that base is 10, because the string holds a number that's in base 10. And we define petoffset as $30, because I hate magic numbers. This is the offset from 0 as an integer to "0" the petscii character.

We'll start the code where a BASIC program starts, $0801, and follow it with the BASIC prelude which will SYS to the start of the real code. Read about this in the aforementioned post too.

Now, we need a running total, and we need to multiply that running total in each loop iteration by base. I've decided that "multiplier" shall serve as the running total. During the multiplication subroutine multiplier is corrupted, but the result is put into product. And multiplicand is left untouched. Multiplicand therefore should be base. So lines 18 to 21 copy base, the constant, as a 16-bit number into multiplicand. And lines 25 to 27 initialize multiplier and the X register to zero.

The loop starts on line 29, where we read one byte from the string at index X. This will be the leftmost and most significant digit. Lines 30 and 31 check to see if this byte is 0. If it is, that's the null terminator on the string, the whole process is complete and it branches to done. If it got a legitimate digit it carries on, and increments X for the next trip around the loop.

The mult16 routine will destroy the accumulator, so first we back that up to the stack. Then we JSR to mult16. This has corrupted multiplier, but the result is in product. We just want product to be put back into our running total, so we copy it into multiplier, which no longer holds anything meaningful anyway. And then we restore the accumulator, which holds the digit from the string.

Lines 43 and 44 convert that single digit to an integer by subtracting "petoffset". And then lines 46 to 51 do a simple 16-bit add to add this digit to the running total. And line 53 restarts the loop to move on to the next digit. And that's it.
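To make the flow of the walkthrough easier to follow, here is a Python model of the same steps. It is not the assembly listing, only a sketch that borrows its names (base, petoffset, multiplier, multiplicand, product). The `mult16` below is a generic shift-and-add multiply that I'm assuming behaves like the real routine as far as the low 16 bits of the result go. The real routine's details may differ.

```python
BASE = 10          # the string holds a base-10 number
PETOFFSET = 0x30   # offset from the integer 0 to the character "0"

def mult16(multiplier, multiplicand):
    """Shift-and-add multiply, keeping only the low 16 bits of the product."""
    product = 0
    while multiplier:
        if multiplier & 1:                          # low bit set: add multiplicand
            product = (product + multiplicand) & 0xFFFF
        multiplicand = (multiplicand << 1) & 0xFFFF
        multiplier >>= 1                            # multiplier is consumed here
    return product

def parse(string_bytes):
    multiplicand = BASE          # base, as a 16-bit number
    multiplier = 0               # doubles as the running total
    x = 0                        # string index, like the X register
    while True:
        a = string_bytes[x]      # read one byte from the string at index X
        if a == 0:               # null terminator: we're done
            break
        x += 1
        product = mult16(multiplier, multiplicand)
        multiplier = product     # copy product back into the running total
        a -= PETOFFSET           # convert the character to an integer
        multiplier = (multiplier + a) & 0xFFFF      # 16-bit add
    return multiplier

print(hex(parse(b"43156\x00")))  # 0xa894
```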

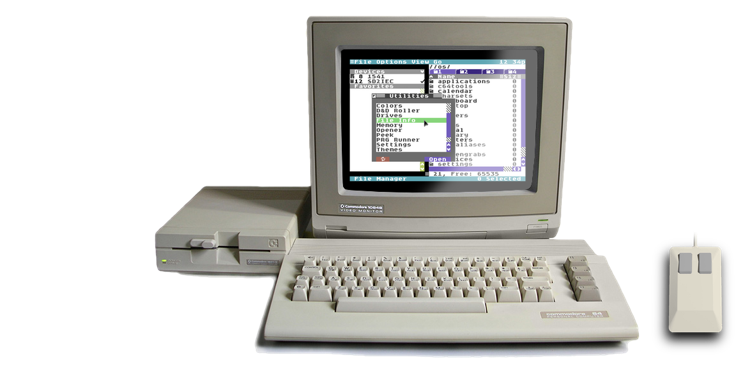

How do we check that it worked? Run it. And the final result should be stored in multiplier which is at $4e,$4f, or 78 and 79. The screenshot shows that if we print out the peek of 78 and the peek of 79 we get 148 and 168 respectively. (Of course, BASIC is converting these byte integers back into strings to be displayed for us, irony.) However, if we stick them in our hex calculator, we see that 148 == $94 and 168 == $A8. Remember that multibyte ints are stored in little endian, or least significant byte first. So, reverse those and we get $A894.
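If you don't have a hex calculator handy, the same check is a couple of lines of Python. The two values are the ones read off the screenshot, and the variable names are just for illustration.

```python
low_byte = 148    # PEEK(78), the byte at $4E
high_byte = 168   # PEEK(79), the byte at $4F

# Little endian: the least significant byte is stored first.
value = low_byte + high_byte * 256
print(value, hex(value))  # 43156 0xa894

# The same thing using Python's built-in byte-order handling.
print(int.from_bytes(bytes([low_byte, high_byte]), "little"))  # 43156
```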

That is indeed the correct answer, according to how I ran the pseudocode by hand. Pretty cool.

I don't want these posts to get too long, so I'll leave conversion the other way, from an int to a string, for part 2 of this post.